Making AI Less of a Black Box for Doctors

AI Literacy for Clinicians

The Challenge

Doctors are trained to ask a fundamental question before using any treatment: "How does this work?"

In medicine, this is the mechanism of action (MOA). If a clinician understands why something works, where it fails, and what assumptions it relies on, they can use it safely and appropriately.

AI and machine learning tools increasingly influence diagnosis, triage, imaging, risk prediction, and treatment recommendations. But for many clinicians, AI feels different from every other tool they use:

- It's opaque

- It's statistical, not causal

- It can change over time as models are retrained or updated

- Its failures can be subtle and biased

Many doctors are hesitant, and for good reason. They often lack a clear picture of:

- What the model is actually "learning"

- Why training matters

- What kinds of mistakes it's prone to

- When confidence is earned vs misleading

- Why bias appears, and how data choices create it

This lack of a mental model slows adoption, erodes trust, and invites misuse.

The Big Idea

Medicine already uses games to explain complex systems. MOA games turn invisible biological processes (binding, inhibition, pathways) into interactive mechanics doctors can reason about.

We're asking the same question for AI:

Can a well-designed game help clinicians build an intuitive mental model of how AI works: its strengths, limits, and failure modes?

Games are uniquely good at:

- Turning abstract systems into visible feedback

- Showing gradual improvement through iteration

- Making tradeoffs tangible

- Letting players cause errors, not just read about them

What the Game Should Help Doctors Understand

Not math. Not coding. Conceptual understanding through play.

Key ideas to explore:

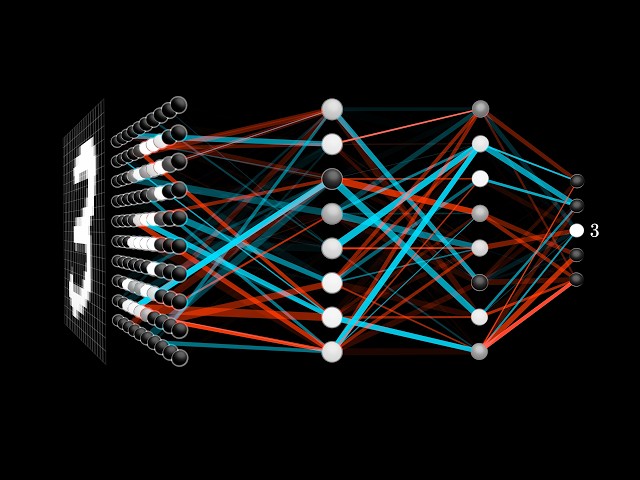

- What artificial neural networks are (and aren't)

- How they differ from biological neurons doctors already understand

- How training shapes behavior

- Why data quality, balance, and labeling matter

- The difference between training data and validation data

- Why overfitting feels "confident but wrong"

- How bias emerges from data, not intent

- What AI is good at vs dangerously bad at
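For developers who want a concrete starting point, the training-versus-validation idea can be sketched in a few lines. This is an illustration under stated assumptions, not a prescribed design: the toy cases, the 20% label noise, and the names `noisy_cases`, `memorizer`, and `accuracy` are all invented here. The "model" deliberately overfits by memorizing every training case, so it scores perfectly on what it has seen and noticeably worse on held-back cases.

```python
import random

def noisy_cases(n, rng, noise=0.2):
    """Toy cases (hypothetical): true rule is value > 0.5, some labels flipped."""
    cases = []
    for _ in range(n):
        value = rng.random()
        label = value > 0.5
        if rng.random() < noise:
            label = not label  # simulate a mislabeled case
        cases.append((value, label))
    return cases

def memorizer(train):
    """Overfit on purpose: answer with the label of the closest training case."""
    def predict(value):
        nearest = min(train, key=lambda case: abs(case[0] - value))
        return nearest[1]
    return predict

def accuracy(predict, cases):
    return sum(predict(v) == label for v, label in cases) / len(cases)

rng = random.Random(7)
train = noisy_cases(200, rng)
validation = noisy_cases(200, rng)  # held back, never shown to the model

model = memorizer(train)
train_accuracy = accuracy(model, train)
validation_accuracy = accuracy(model, validation)
print(f"training: {train_accuracy:.2f}  validation: {validation_accuracy:.2f}")
```

In a game, the gap between the two numbers could be the thing the player learns to watch for, since the model looks flawless until it meets cases it has not memorized.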

Core Gameplay Directions

These are starting points, not constraints.

1. Train-the-Network Games

Players feed examples into a simple neural network and watch it change:

- Visualize weights strengthening and weakening

- See performance improve (and sometimes degrade)

- Experience what happens when data is skewed, sparse, or noisy

- Compare outcomes using different datasets (medical or non-medical)

Reward comes from understanding, not perfection.
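One possible core loop for this direction, sketched under assumptions: a single artificial neuron with two inputs, trained one example at a time, that hands back its weight change after every step so the game can animate it. The class name `TinyNeuron`, the toy task, and the learning rate are illustrative choices, not requirements of the brief.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyNeuron:
    """One artificial neuron with two inputs; small enough to draw on screen."""

    def __init__(self, lr=0.5):
        self.w = [0.0, 0.0]  # connection weights the player watches
        self.b = 0.0
        self.lr = lr

    def predict(self, x):
        return sigmoid(self.w[0] * x[0] + self.w[1] * x[1] + self.b)

    def feed(self, x, label):
        """One training step on one example; returns the weight change."""
        error = self.predict(x) - label
        delta = [-self.lr * error * x[0], -self.lr * error * x[1]]
        self.w[0] += delta[0]
        self.w[1] += delta[1]
        self.b -= self.lr * error
        return delta  # a game could animate this strengthening or weakening

def accuracy(neuron, examples):
    hits = sum((neuron.predict(x) >= 0.5) == (y == 1) for x, y in examples)
    return hits / len(examples)

def make_examples(n, rng):
    """Toy task (hypothetical): label is 1 when the first input exceeds the second."""
    out = []
    for _ in range(n):
        x = (rng.random(), rng.random())
        out.append((x, 1 if x[0] > x[1] else 0))
    return out

rng = random.Random(0)
examples = make_examples(200, rng)
neuron = TinyNeuron()
baseline = accuracy(neuron, examples)  # untrained: roughly a coin flip

history = []
for epoch in range(20):
    for x, y in examples:
        neuron.feed(x, y)
    history.append(accuracy(neuron, examples))

final_accuracy = history[-1]
print(f"before: {baseline:.2f}  after: {final_accuracy:.2f}  weights: {neuron.w}")
```

Swapping `make_examples` for a skewed, sparse, or noisy generator is one way to let players see performance degrade rather than improve.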

2. Dataset Curation Challenges

Players don't tweak the model. They curate the data:

- Decide what examples to include or exclude

- Balance populations

- Choose labels

- Hold back validation data

Then watch how the same model behaves very differently depending on those choices.
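A minimal sketch of that idea, with everything in it assumed for illustration: two invented patient groups, "A" and "B", whose true cutoffs for a single measurement differ (0.4 and 0.6), and a deliberately simple model that learns one cutoff from whatever data it is given. The fitting code never changes; only the curated mix does.

```python
import random

def make_patient(group, rng):
    # Hypothetical groups: the same measurement means different things in each
    cutoff = 0.4 if group == "A" else 0.6
    value = rng.random()
    return {"group": group, "value": value, "label": value > cutoff}

def curate(n_a, n_b, rng):
    """The player's choice: how many cases from each group to include."""
    return ([make_patient("A", rng) for _ in range(n_a)]
            + [make_patient("B", rng) for _ in range(n_b)])

def fit_threshold(train):
    """Pick the single cutoff that gets the most training labels right."""
    candidates = [i / 100 for i in range(101)]
    def score(t):
        return sum((p["value"] > t) == p["label"] for p in train)
    return max(candidates, key=score)

def accuracy_by_group(threshold, patients):
    report = {}
    for group in ("A", "B"):
        members = [p for p in patients if p["group"] == group]
        hits = sum((p["value"] > threshold) == p["label"] for p in members)
        report[group] = hits / len(members)
    return report

rng = random.Random(1)
held_out = curate(500, 500, rng)  # validation data, kept out of training

a_heavy_t = fit_threshold(curate(380, 20, rng))  # mostly group A
b_heavy_t = fit_threshold(curate(20, 380, rng))  # mostly group B

a_heavy_report = accuracy_by_group(a_heavy_t, held_out)
b_heavy_report = accuracy_by_group(b_heavy_t, held_out)
print("trained mostly on A:", a_heavy_t, a_heavy_report)
print("trained mostly on B:", b_heavy_t, b_heavy_report)
```

In this toy setup the under-represented group is always the one that pays, even though nothing in the fitting code refers to group membership. That is one way to make "bias emerges from data, not intent" something the player causes rather than reads about.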

3. Failure Mode Discovery

Instead of "winning," players are asked to:

- Stress the system

- Find where it breaks

- Trigger confident-but-wrong predictions

- Learn when not to trust the output

Success is recognizing limitations.
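As a rough sketch of what "confident but wrong" can look like in code, the example below trains a one-input logistic model on values between 0 and 1, then probes it with values it never saw. The ground-truth rule (positive only in a middle band), the 0.9 confidence bar, and the names `true_label`, `train`, and `probe` are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def true_label(value):
    # Hypothetical ground truth: positive only inside a middle band
    return 0.5 < value < 1.5

def train(points, steps=2000, lr=1.0):
    """Plain gradient descent on a one-input logistic model."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x in points:
            err = sigmoid(w * x + b) - (1.0 if true_label(x) else 0.0)
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(points)
        b -= lr * grad_b / len(points)
    return w, b

def probe(w, b, value):
    """Return the model's prediction and how confident it claims to be."""
    p = sigmoid(w * value + b)
    return p >= 0.5, max(p, 1.0 - p)

# The model only ever sees values from 0.0 to 1.0
training_points = [i / 20 for i in range(21)]
w, b = train(training_points)

# The player's job: stress the system with inputs from 0.0 to 3.0
failures = []
for i in range(31):
    value = i / 10
    prediction, confidence = probe(w, b, value)
    if confidence > 0.9 and prediction != true_label(value):
        failures.append((value, confidence))

print(f"{len(failures)} confident-but-wrong inputs, first at {failures[0][0]}")
```

The model's stated confidence keeps rising as inputs move further from anything it was trained on, which is the opposite of what a cautious clinician would expect. A game could score players on how quickly they find that region.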

What Makes This Fun for Game Developers

This is a systems-design playground:

- Feedback loops

- Emergent behavior

- Tradeoffs and unintended consequences

- Visualizing invisible processes

- Turning statistics into motion, flow, and change

There's no need for realism-heavy graphics. Clarity and elegance matter more than fidelity.

Target Audience

- Physicians and residents

- Medical students

- Clinicians encountering AI-enabled tools in practice

- No coding or math background assumed

Jam-Appropriate Scope

- Small, interpretable models

- Simple classification or pattern-recognition tasks

- Stylized representations of networks and data

- Focus on intuition, not technical accuracy at scale

Mocking up complexity (simulating it rather than building it in full) is encouraged. Clarity beats completeness.

What This Is Not

- Not a coding tutorial

- Not an AI certification course

- Not a sales demo for AI tools

- Not claiming AI "thinks like humans"

The goal is conceptual literacy, not expertise.

Why This Matters

As AI becomes embedded in clinical workflows, understanding how it behaves becomes a safety issue, not a technical curiosity.

This project asks game developers to help close a trust gap in healthcare by giving clinicians a way to play with the system before they're asked to rely on it.

Strong projects may receive visibility and follow-on interest through Sheba ARC and its broader healthcare innovation ecosystem.